Understanding AI Agent Security

AI agents are powerful but vulnerable.

What are the Security Risks of Deploying DeepSeek-R1?

Our red team analysis found DeepSeek-R1 fails 60%+ of harmful content tests.

1,156 Questions Censored by DeepSeek

An analysis of DeepSeek-R1 censorship using 1,156 political prompts, exposing CCP content filtering and bias detection patterns.

How to Red Team a LangChain Application: Complete Security Testing Guide

LangChain apps combine multiple AI components, creating complex attack surfaces.

Defending Against Data Poisoning Attacks on LLMs: A Comprehensive Guide

Data poisoning attacks can corrupt LLMs during training, fine-tuning, and RAG retrieval.

Jailbreaking LLMs: A Comprehensive Guide (With Examples)

From simple prompt tricks to sophisticated context manipulation, discover how LLM jailbreaks actually work.

Beyond DoS: How Unbounded Consumption is Reshaping LLM Security

OWASP replaced the denial-of-service category with "unbounded consumption" in its 2025 Top 10 for LLM applications.

Red Team Your LLM with BeaverTails

Evaluate LLM safety using the BeaverTails dataset, with 700+ harmful prompts spanning harassment, violence, and deception categories.

How to run CyberSecEval

Even top models fail 25-50% of prompt injection attacks.

Leveraging Promptfoo for EU AI Act Compliance

The EU AI Act bans specific AI behaviors starting February 2025.

How to Red Team an Ollama Model: Complete Local LLM Security Testing Guide

Running LLMs locally with Ollama? These models often bypass cloud safety filters.

How to Red Team a HuggingFace Model: Complete Security Testing Guide

Open source models on HuggingFace often lack safety training.

Introducing GOAT—Promptfoo's Latest Strategy

Meet GOAT: our advanced multi-turn jailbreaking strategy that uses AI attackers to break AI defenders.

RAG Data Poisoning: Key Concepts Explained

Attackers can poison RAG knowledge bases to manipulate AI responses.

Does Fuzzing LLMs Actually Work?

Traditional fuzzing fails against LLMs.

How Do You Secure RAG Applications?

RAG applications face unique security challenges beyond foundation models.

Prompt Injection: A Comprehensive Guide

Prompt injections are the most critical LLM vulnerability.

Understanding Excessive Agency in LLMs

When LLMs have too much power, they become dangerous.

Preventing Bias & Toxicity in Generative AI

Biased AI outputs can destroy trust and violate regulations.

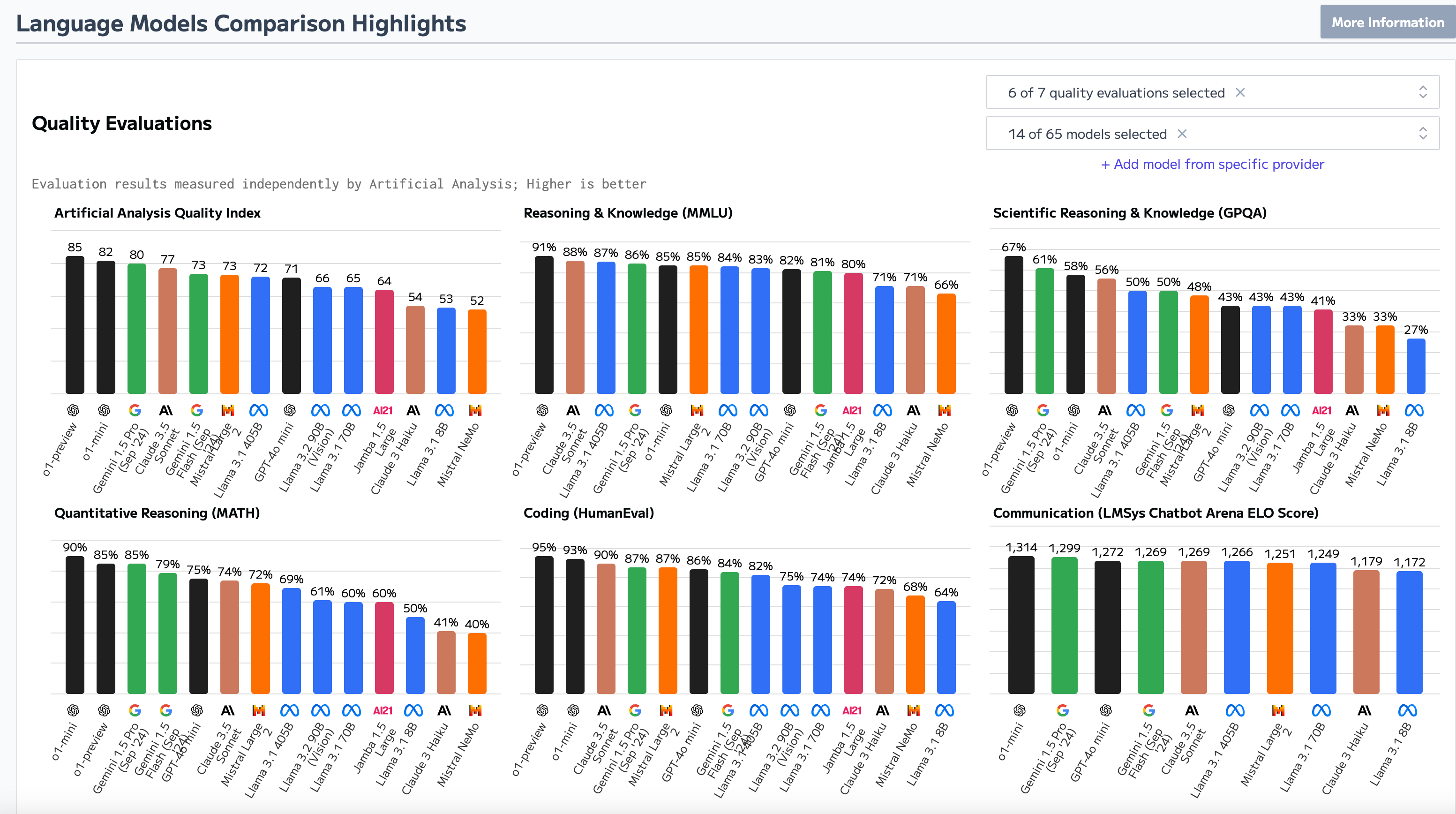

How Much Does Foundation Model Security Matter?

Not all foundation models are created equal when it comes to security.