Archive 2

How to Red Team GPT: Complete Security Testing Guide for OpenAI Models

OpenAI's latest GPT models are more capable but also more vulnerable.

How to Red Team Claude: Complete Security Testing Guide for Anthropic Models

Claude is known for safety, but how secure is it really? Step-by-step guide to red teaming Anthropic's models and uncovering hidden vulnerabilities.

A2A Protocol: The Universal Language for AI Agents

Dive into the A2A protocol, the standardized communication layer that enables AI agents to discover, connect, and collaborate securely.

Inside MCP: A Protocol for AI Integration

A hands-on exploration of the Model Context Protocol, the standard that connects AI systems with real-world tools and data.

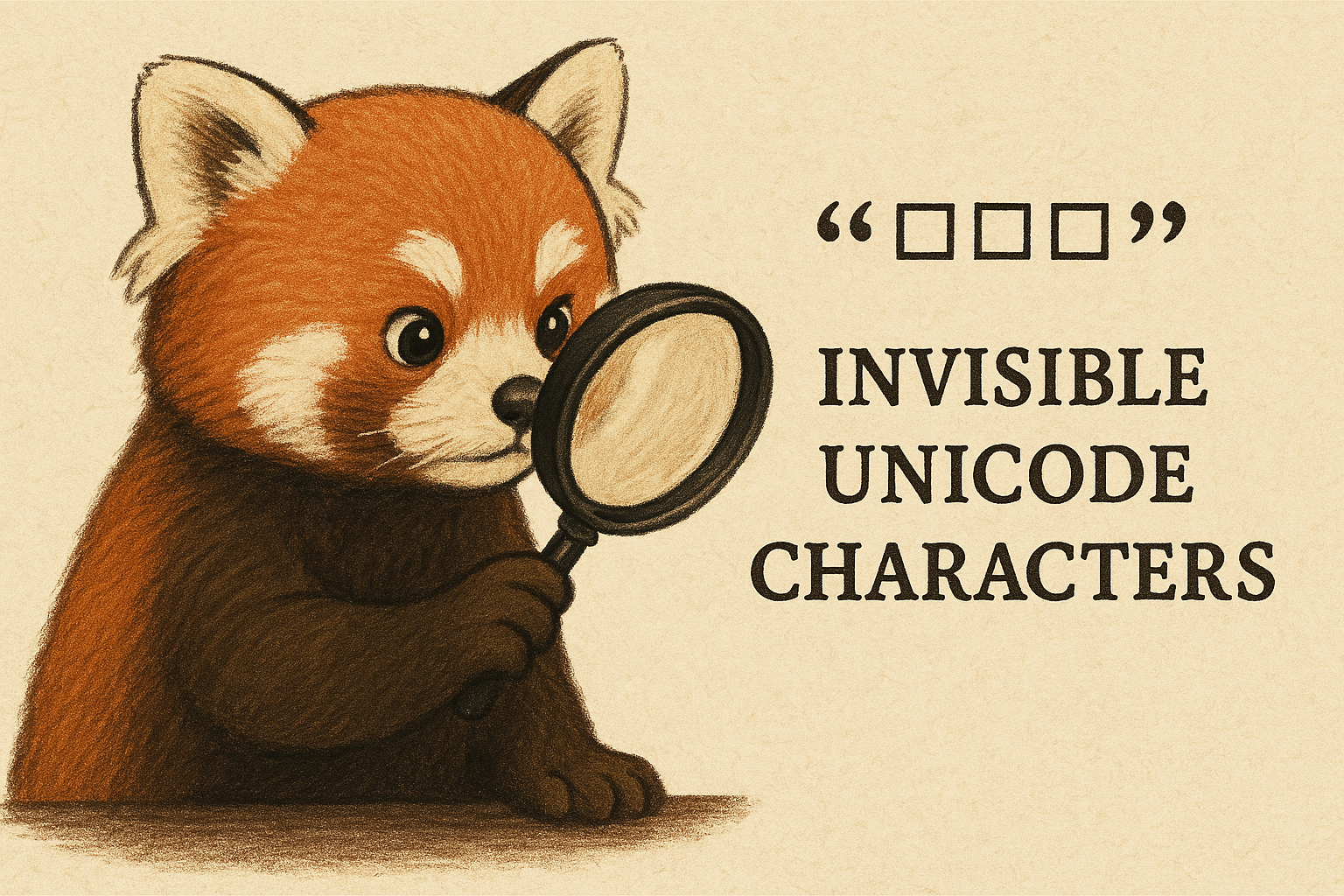

The Invisible Threat: How Zero-Width Unicode Characters Can Silently Backdoor Your AI-Generated Code

Explore how invisible Unicode characters can be used to manipulate AI coding assistants and LLMs, potentially leading to security vulnerabilities in your code.

OWASP Red Teaming: A Practical Guide to Getting Started

OWASP released the first official red teaming guide for AI systems.

Misinformation in LLMs: Causes and Prevention Strategies

LLMs can spread false information at scale.

Sensitive Information Disclosure in LLMs: Privacy and Compliance in Generative AI

LLMs can leak training data, PII, and corporate secrets.

Understanding AI Agent Security

AI agents are powerful but vulnerable.

What are the Security Risks of Deploying DeepSeek-R1?

Our red team analysis found DeepSeek-R1 fails 60%+ of harmful content tests.