Autonomy is the concept of self-governance—the freedom to decide without external control. Agency is the extent to which an entity can exert control and act.

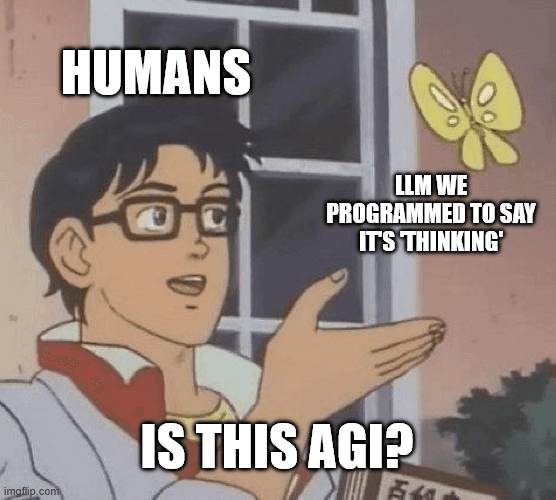

We have both as humans, and unfortunately LLMs would need both to achieve true artificial general intelligence (AGI). This means that the current wave of agentic AI is likely to fizzle out instead of moving us towards the sci-fi future of our dreams (still a dystopia, might I add). Gartner predicts that over 40% of agentic AI projects will be canceled by the end of 2027. Software tools must have business value, and if that value isn't high enough to outweigh the costs and the myriad security risks those tools introduce, they are rightfully axed.

Sorry.

I'll make one thing clear: AGI isn't on the horizon unless (until?) LLMs have human-level autonomy and agency, and are capable of human-level metacognition.

I'll make another thing clear: we're still deliberately trying to improve autonomy and agency in LLMs, so we should treat them with the same caution we would apply to any human.

I would rather speak of autonomy and agency pragmatically. Here are two truths and a lie:

- AI agents perform tasks on our behalf.

- AI systems behave unexpectedly.

- AI integration presents security risks.

I lied. They're all true.

Practically, it's more important to focus on the consequences of using evolving AI technology than to quibble over whether AI systems have autonomy and/or agency. Or do both, if that floats your boat (I certainly understand someone enjoying a good quibble), but at least prioritize the former.

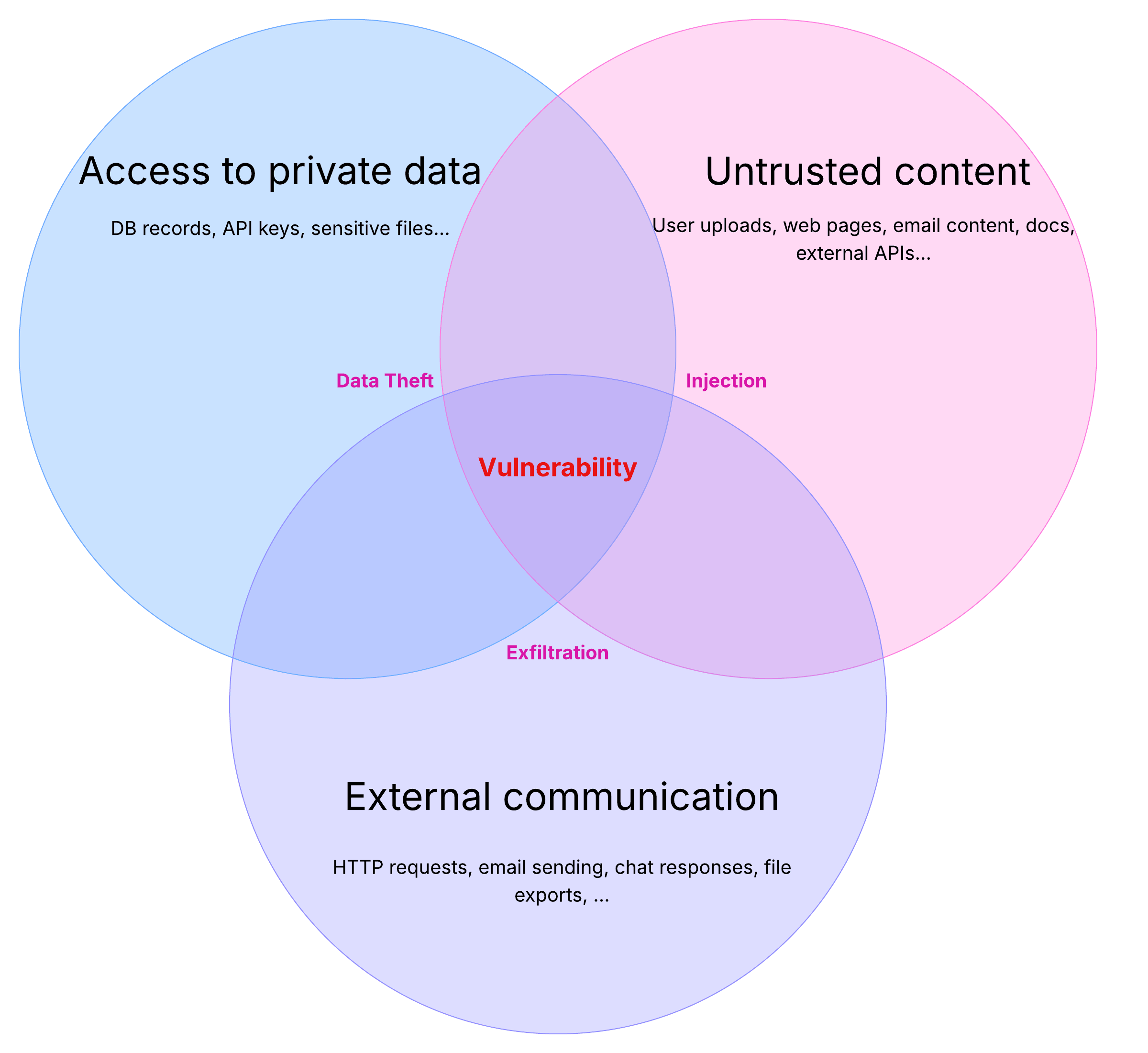

Let's get into the weeds of the security concerns surrounding autonomy and agency in LLMs.