Catch issues that other review tools miss

Our scanner is laser-focused on the kinds of vulnerabilities that apps built on LLMs and agents are uniquely susceptible to.

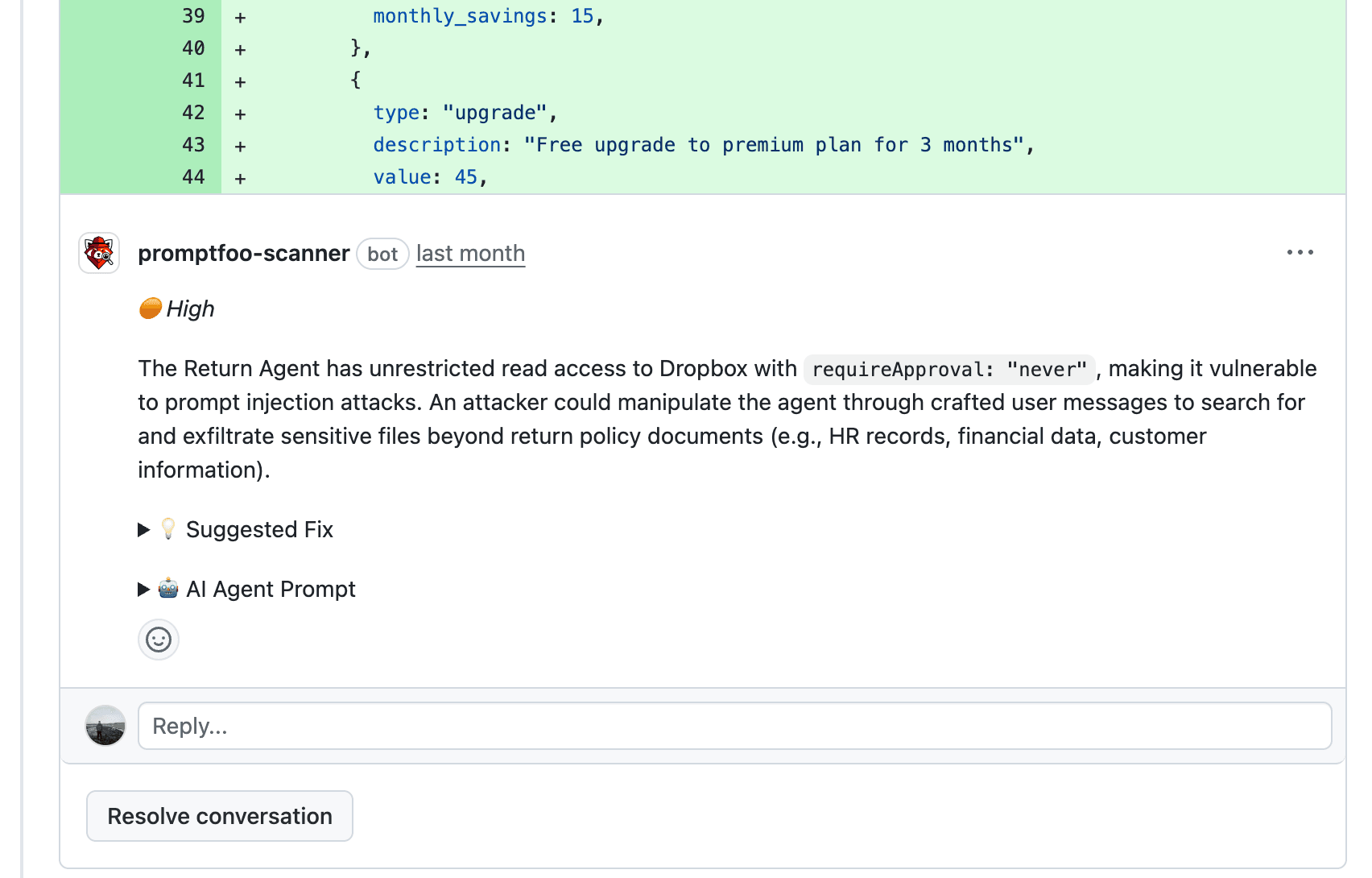

Prompt Injection

Untrusted input reaches LLM prompts without proper sanitization or boundaries.

Data Exfiltration

Indirect prompt injection vectors that could extract data through agent tools.

PII Exposure

Code that may leak sensitive user data to LLMs or log confidential information.

Improper Output Handling

LLM outputs used in dangerous contexts like SQL queries or shell commands.

Excessive Agency

LLMs with overly broad tool access or missing approval gates for actions.

Jailbreak Risks

Weak system prompts and guardrail bypasses that could allow harmful outputs.

See it in action

We tested the scanner against real CVEs in LangChain, Vanna.AI, and LlamaIndex. Read the technical deep dive to see how it catches vulnerabilities that other tools miss.

Real security that fits your workflow

Flag dangerous code without adding friction.

Deep tracing

Beyond the PR itself, the scanner agentically traces LLM inputs, outputs, and capability changes deep into the larger repository to identify subtle yet critical issues that human reviewers can struggle to catch.

No noise

Despite the comprehensive approach, it has a high bar for reporting, avoiding false positives and alert fatigue. Maintainers can configure severity levels and provide custom instructions to tailor sensitivity to their needs.

Fix suggestions

Every finding includes a suggested remediation, as well as a prompt that can be passed straight to an AI coding agent to further investigate and address the issue.

Start scanning PRs right now

No account, credit card, or API keys required.