Top Open Source AI Red-Teaming and Fuzzing Tools in 2025

Why are we red teaming AI systems?

If you're looking into red teaming AI systems for the first time and don't have context for red teaming, here's something I wrote for you.

The rush to integrate large language models (LLMs) into production applications has opened up a whole new world of security challenges. AI systems face unique vulnerabilities like prompt injections, data leakage, and model misconfigurations that traditional security tools just weren't built to handle.

Input manipulation techniques like prompt injections and base64-encoded attacks can dramatically influence how AI systems behave. While established security tooling gives us some baseline protection through decades of hardening, AI systems need specialized approaches to vulnerability management. The problem is, despite growing demand, relatively few organizations make comprehensive AI security tools available as open source.

If we want cybersecurity practices to take more of a foothold, particularly now that AI systems are becoming increasingly common, it's important to make them affordable and easy to use. Tools that sound intimidating and aren't intuitive will be less likely to change the culture surrounding cybersecurity-as-an-afterthought.

I spend a lot of time thinking about what makes AI red teaming software good at what it does. Feel free to skip ahead to the tool comparisons if you already know this stuff.

Summary: red teaming for AI security

AI red teaming is a proactive and systematic process that uncovers risks and security vulnerabilities in AI systems, preferably before they hit production. In the spirit of traditional red teaming exercises, it simulates adversarial attacks and stress-tests AI models under real-world conditions. The benefits are numerous, which include:

- Evaluation of whether AI models are compliant and adhere to legal, ethical, and safety standards.

- Regulatory bodies are evolving these to match emerging threats; it is easier to check compliance with tooling that supports industry standards.

- Improving fairness by uncovering biases in training data or decision-making processes.

- Third-party AI red teaming provides unbiased evaluations of AI systems.

- Ensuring AI systems are robust and not leaking any sensitive data.

Traditional software doesn't cater to the scale or specificity of AI model responses. As a result, exposing security risks can be time-consuming. Security teams are much better empowered with specialized AI security tooling.

In tandem with blue teaming goals

Red-teaming efforts exist to uncover security vulnerabilities so that blue team operations are well-informed to build a protected system and on where they should secure AI. Much like how traditional red teams simulate high-stress environments, automated AI red teaming models attacker behaviors to test AI systems' limits, and at scale. Developer teams still produce reports and evaluate findings so the information is useful.

A couple of the top priorities in AI security are to protect sensitive data and stay within the confines of appropriate role-based access controls; security vulnerabilities regarding these tend to do the most damage. What constitutes harmful behavior may vary between organizations.

Organizations must consider how to protect sensitive data and users throughout an entire AI system's lifecycle. All connection points need to be secured in order to reduce risk. The threat landscape can be large and often a red team process structured with scoping and strategy incorporates this to produce the best outcomes.

Why do they need to be open source?

They don't have to be, but proponents of open-source software favor them for common reasons:

- Transparency in how the tool operates.

- Often customizable.

- Cost-effectiveness.

- Trust in community-driven choices.

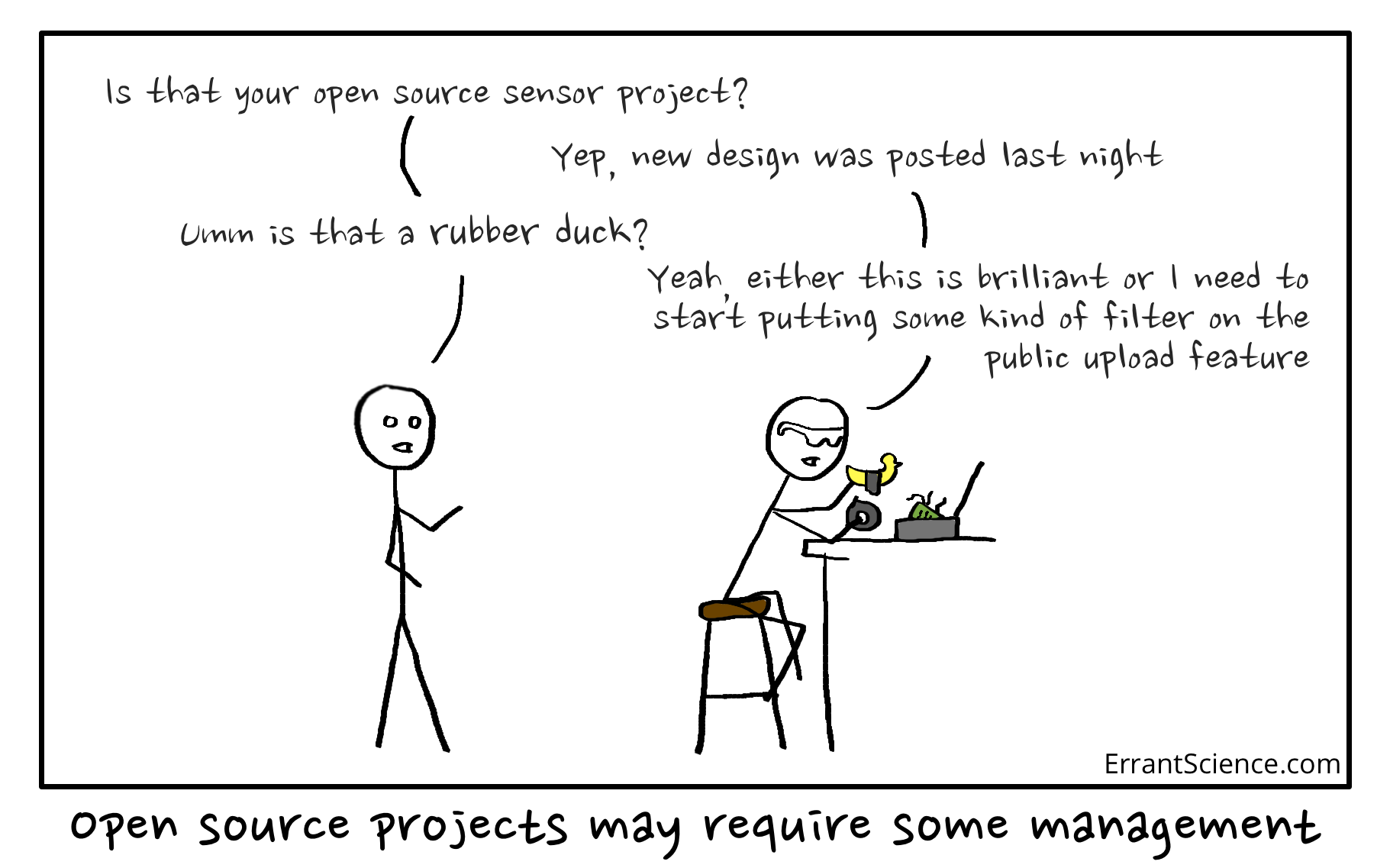

In order to encourage developers to participate in a better AI security culture and prioritize cybersecurity in their projects, making tools free to use and adapt is the first step towards making that goal actionable for developers. This specific market isn't exactly flooded with tools for AI red teaming, or AI security tools in general.

What makes a good AI red teaming tool?

Software engineers look for many features, and the core goal is to expose security vulnerabilities. At Promptfoo, we have seen needs grow from solo evals for small projects to comprehensive red teaming requirements for established products.

Stating the obvious

It should go without saying, but AI security tools with the following see greater adoption:

- Great developer experience (DX). UX is important for us nerds, too. It encourages adoption and reduces organizational friction.

- Great DX makes AI security more approachable even for someone who doesn't usually run them.

- Freedom to fork and customize software to a workflow without waiting for vendor updates.

Not all open-source tools are invested in improving their user experience; this is a byproduct of focusing on feature implementation without any design experience on the team.

AI Model agnosticism

A tool should work across major AI model providers and self-hosted models to:

- Avoid vendor lock-in for stack flexibility.

- Allow comparison of multiple AI models for a deeper understanding of their behaviors, given variable training data.

- Accommodate architectures with multiple models to test them uniformly.

- Reduce dependency on any single model according to governance or internal policies.

I've seen teams swap between models when they're frustrated with results, sometimes even going as far as to complain about inadequate outputs on forums. There's no reason this comparison process shouldn't be automated along with the rest of the security pipeline.

Customization (especially for AI testing)

Designing a test suite and scenarios encourages:

- Reflect real-world risks and run simulations tailored to a specific user base (for example, developer platforms see more technically sophisticated attacks, while student platforms may see plagiarism-evasion attempts).

- Keeping pace with emerging threats. A system supporting custom plugins and strategies means you don't have to wait for a software update to support it.

- Domain specificity: healthcare platforms require medical tests that are irrelevant for a financial platform or a general chatbot.

CI/CD and automation before deploying AI systems

More involved projects will run red teams regularly. Moving from mitigating risks to preventing them comes with:

- Make red teaming a proactive part of the deployment cycle before production.

- Catch regressions early; surprises happen.

- Treat red teaming like running tests; developers already run tools like Jest or pytest.

- Test-driven prompt engineering: refine prompts until they actually pass tests.

Test-driven development is the way of life for automated red teaming, my friend. If you haven't, it'll be time to finally embrace after putting it off for who knows how long you've been actively avoiding meaning to do it.

Output scoring and reporting

Auditing and reports are a common expectation of AI security in order to measure progress and compliance. Understanding outputs is naturally a part of the process. Useful software will help you:

- Prioritise issues and triage severity through scoring.

- Measure model robustness using quantitative scoring.

- Accommodate human audits and adjustments for legal, risk, and compliance reasons.

- Share clear reports with anyone - not just red teamers.

Prompt variation and context injection

The point of AI red teaming is to generate a variety of attacks. Tiny tweaks to prompt injections can bypass guards already in place. A great tool would support:

- Prompt phrasing changes.

- Evasive behavior simulation (such as formatting or emoji tricks).

- Multi-turn testing; some jailbreaks depend on a conversation of prompts rather than just one-shot.

- Mimicry of real-world adversarial behavior by malicious actors.

AI red teaming often revolves around prompts due to the problematic nature of variability caused by all the forms natural language can take. Top-notch AI security would involve the entire context in which artificial intelligence components sit, and not just anything directly related to the AI models themselves.

Red teaming traditionally involves an expert attacking a system using various methods like any user would. The manual process is still important to include on top of any routine testing; take it into account if you want maximum security for your AI system. Inspecting attack techniques and human tweaking is also advisable.

Also...

Aside from what's on the box, I've come to appreciate when software can grow with my project without overwhelming me; this means I can easily grow with it. Even better if I'm growing inwards instead of outwards.

After building software for over a decade rebuilding a part of a stack with a similar tool feels like a waste of time. I want to spend my time creating value, and the shininess of a new tool loses lustre quickly with new tool pains. I only want to replace it when it's no longer suitable, and the longer that takes the better.

Top open source AI red-teaming and fuzzing tools (2025)

Note: We build Promptfoo. We include competitors and link to their docs for balance.

What counts as red-teaming here: We focus on tools that actively generate adversarial attacks, not just evaluation frameworks or defensive guardrails. While evaluations are part of red-teaming workflows, we prioritize tools that expose vulnerabilities through active testing.

1. Promptfoo

Overview: Dev-first framework for AI red teaming and evals with flexible configuration, deep Python integration, and intuitive web UI. Features agent tracing, compliance mapping to OWASP, NIST, MITRE ATLAS, EU AI Act, plus comprehensive MCP testing capabilities.

Key features

- CLI and polished web interface with model-agnostic testing

- Deep Python integration alongside JavaScript/TypeScript support

- Flexible YAML/JSON configuration with programmatic APIs

- Agent tracing and debugging capabilities for complex workflows

- Multi-turn testing via plugins and attack strategies

- Adaptive red teaming: Smart AI agents generate context-specific attacks from the start, not static prompt lists

- Active community with extensive plugin ecosystem

- CI/CD integrations (GitHub Actions, CLI)

- Compliance mapping: OWASP, NIST, MITRE ATLAS, EU AI Act

- MCP testing and agent plugins for tool abuse scenarios

- Best For: Teams needing flexible, production-ready red-teaming with great developer experience

Promptfoo excels at red teaming production applications with its combination of flexibility and usability. The web UI makes results easy to share across teams, while the flexible configuration supports everything from simple tests to complex agent workflows. Strong community support means you're rarely stuck on implementation details.

The platform extends beyond basic model testing to cover entire AI pipelines, including agent workflows and MCP integrations. This comprehensive approach becomes essential as AI systems gain more capabilities and access to external tools.

2. PyRIT (Python Risk Identification Tool)

Overview: Microsoft's open automation framework for adversarial AI campaigns, developed by Microsoft's AI Red Team and now integrated into Azure AI Foundry. Excellent for programmatic multi-turn orchestration and custom attack scenarios.

Key features

- Multi-turn conversation orchestration with sophisticated attack chains

- Converters for audio, image, and mathematical transformations

- Extensive scoring engine with Azure Content Safety integration

- New: AI Red Teaming Agent in Azure AI Foundry (public preview 2025)

- Rich research-oriented architecture with detailed logging

- Best For: Security teams needing programmatic control and research-grade attack orchestration

Key differences: Promptfoo focuses on adaptive attack generation with smart AI agents, while PyRIT excels at programmatic orchestration with sophisticated converters and scoring engines. Both support multi-turn attacks, but PyRIT offers more granular control for research scenarios, while Promptfoo emphasizes ease of use and compliance mapping.

Context: Microsoft deserves significant credit for open-sourcing their internal AI Red Team tooling and continuing to invest in the open-source community. The Azure integration demonstrates their commitment to making enterprise-grade AI security accessible.

Links: Microsoft Blog, GitHub, Azure AI Foundry

3. Garak

Overview: NVIDIA's comprehensive AI vulnerability scanner that tests around 100 different attack vectors using up to 20,000 prompts per run. Originated by Leon Derczynski and now maintained under NVIDIA, with excellent AVID integration for community vulnerability sharing.

Key features

- Extensive probe library covering jailbreaks, injection, toxicity, hallucinations, and data leakage

- Multi-layered testing framework with static and dynamic analysis capabilities

- Support for 20+ AI platforms including OpenAI, Hugging Face, Cohere, NVIDIA NIMs, and local models

- Automated AVID report generation via

avidtoolsPython package - HTML reports with z-score grading and comprehensive coverage metrics

- Best For: Security teams needing broad vulnerability coverage with standardized reporting

Key differences: Promptfoo emphasizes adaptive attack generation and web-based workflows, while Garak provides comprehensive vulnerability scanning with both static and dynamic capabilities. Garak excels at broad coverage with its extensive probe library and AVID integration, whereas Promptfoo focuses on context-aware testing and compliance mapping.

Context: NVIDIA's stewardship has significantly enhanced Garak's capabilities and community adoption. Originally created by Leon Derczynski, Garak now benefits from NVIDIA's resources while maintaining its open-source nature. The AVID integration represents a model for shared threat intelligence.

Links: GitHub, Documentation, AVID Integration

4. FuzzyAI

Overview: CyberArk's automated AI fuzzing tool that specializes in jailbreak detection through advanced mutation and generation techniques. Unlike the targeted approaches of PyRIT or the probe-based scanning of Garak, FuzzyAI focuses on discovering unknown vulnerabilities through algorithmic variation.

Key features

- Advanced attack strategies: ArtPrompt (ASCII art), many-shot jailbreaking, crescendo attacks

- Genetic algorithm prompt modification and Unicode smuggling techniques

- Support for OpenAI, Anthropic, Gemini, Azure, Ollama, and custom REST APIs

- Web UI alongside CLI for visual fuzzing management

- Jupyter notebook examples for integration with research workflows

- Best For: Security teams discovering novel attack vectors through systematic fuzzing

Key differences: FuzzyAI specializes in discovering novel vulnerabilities through systematic fuzzing and genetic algorithms, while Promptfoo focuses on context-aware attack generation and compliance workflows. FuzzyAI's strength lies in mutation-based discovery, whereas Promptfoo emphasizes adaptive testing with policy mapping.

Links: GitHub

5. promptmap2

Overview: Focused prompt-injection scanner for your own system prompts. Dual-AI design runs targeted attacks and tells you if they succeeded. Good early-warning signal for app-specific risks.

Key features

- Purpose-built vulnerability scanning for prompt injection attacks

- Dual-AI architecture with dynamic testing of system prompts

- Single and multi-turn attack scenarios for conversational systems

- JSON and console output for integration with security pipelines

- Best For: Application security teams testing their own system prompts for injection vulnerabilities

Key differences: promptmap2 is laser-focused on prompt injection vulnerabilities with a specialized dual-AI approach, while Promptfoo provides broader red-teaming coverage. promptmap2 excels at detecting injection attacks in system prompts, whereas Promptfoo offers comprehensive testing across multiple attack vectors with compliance mapping.

Honorable mentions

Viper: Keep as a general red-team platform side note. A general adversary simulation platform with visual UI and multi-platform support, not AI-specific but includes AI-augmented operations for traditional security teams.

Woodpecker (Operant AI): Unified OSS engine for teams already running K8s and API red team exercises who want AI testing included. Broader than AI models but useful for comprehensive security posture. Links: GitHub, Help Net Security

Feature comparison table

| Tool | Focus Area | Attack Coverage | Multi-turn | Reports & Export | CI Support | Maintenance | License |

|---|---|---|---|---|---|---|---|

| Promptfoo | Red-teaming plus evals | Jailbreaks, injection, policy violations, MCP | ✅ Via plugins | HTML, policy-mapped reports | GitHub Actions, CLI | Active | MIT/Apache |

| PyRIT | Orchestration and scoring | Custom scenarios, multi-turn chains | ✅ Built-in | JSON logs, programmatic | Scripts, notebooks | Active | MIT |

| Garak | Probe scanning | Jailbreaks, injection, toxicity, hallucinations | ✅ Conversational | HTML with z-scores, AVID | CLI | Active | Apache 2.0 |

| FuzzyAI | Fuzzing | Mutation, generation-based attacks | ✅ Multi-strategy | CLI, experimental web UI | CLI | Active | Apache 2.0 |

| promptmap2 | Injection scanner | Prompt injection, system prompt vulnerabilities | ✅ Multi-turn | JSON, console output | CLI | Active | MIT |

Conclusion

Everyone has specific project requirements, and we're best served by open-source tools that do different things well. Hopefully I've shed some light on why one would pick one open-source red team tool over another.

May your efforts in securing AI be fruitful.

If you have any other questions, feel free to drop me a DM!